Optimizing VRAM for efficient LLM performance

Without optimization, top-tier hardware still won’t deliver. Here are some useful tips to optimize your VRAM usage, helping your GPU run more efficiently and improving overall performance when working with LLMs.

As Large Language Models (LLMs) become more popular, the need to run them efficiently is growing just as quickly.

While access to powerful hardware, whether through rentals or custom setups, has become easier, true performance depends on optimizing your system, especially regarding VRAM.

In this blog, I will explain why VRAM is the core requirement for LLM inference, how model size and precision formats affect memory usage, and what to consider when choosing the right GPU.

Let’s get started!

Why VRAM is the main requirement for LLMs

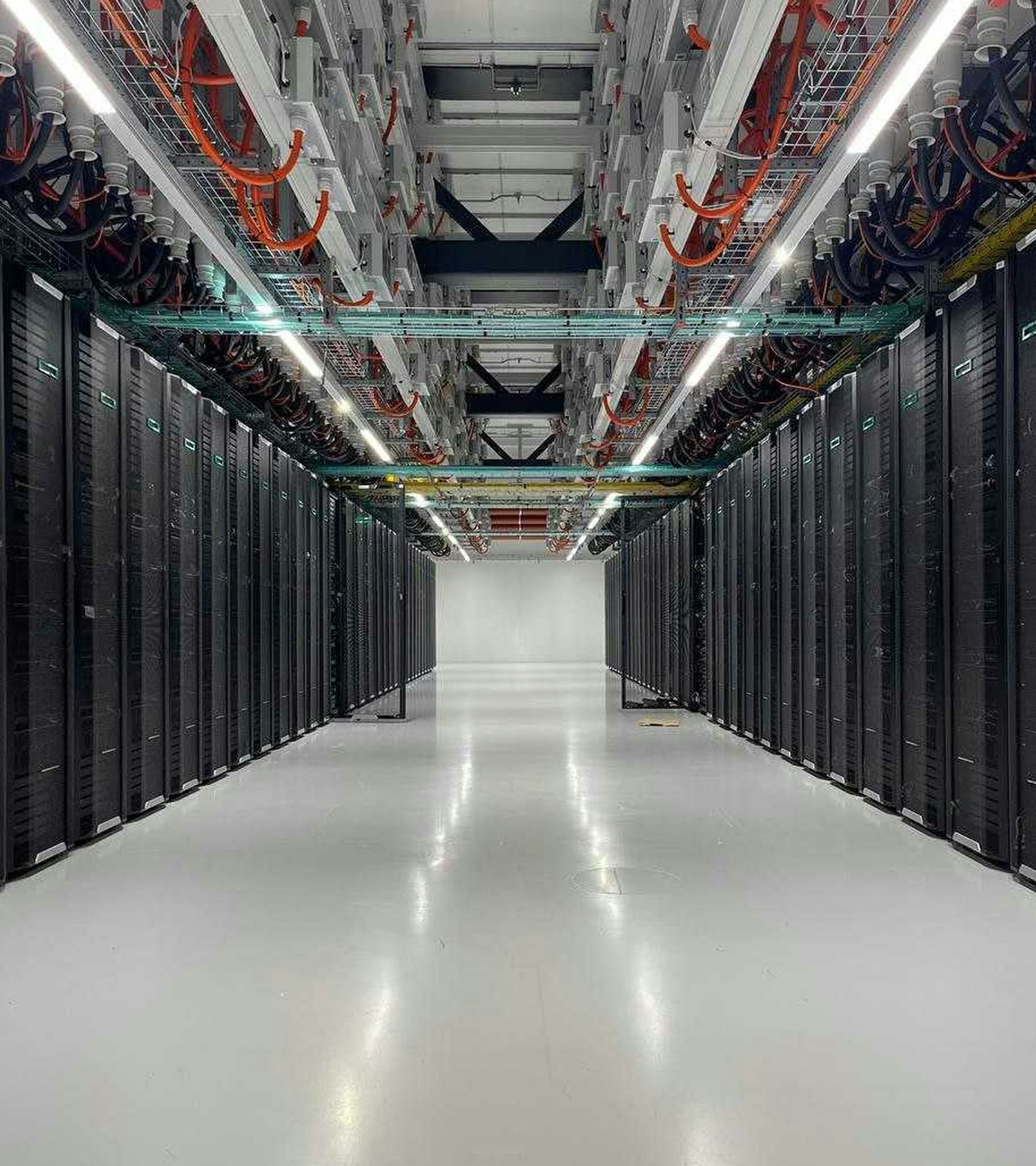

GPUs remain the foundation for LLM workloads because they can perform large numbers of computations in parallel. However, to truly optimize your setup, you need to consider the entire architecture.

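Unlike traditional computing, where tasks can be easily transferred between system memory and GPU memory, LLMs perform best when the entire model fits within VRAM. Any weights offloaded to system memory have to travel over the much slower PCIe bus every time they are needed.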

VRAM requirements for LLMs follow a simple formula in which memory needs scale linearly with model size:

VRAM required (GB) = number of parameters (in billions) × bytes per parameter × overhead factor

Number of parameters - parameters are the learned weights of a neural network. They must be stored in memory for the forward pass and, during training, for the backward pass.

For example, DeepSeek-R1, released in January 2025, has 671 billion parameters, Llama 3.3 has 70 billion, and Stable Diffusion 3.5 Large (an image model, included here for comparison) has 8.1 billion.

Bytes per parameter - how much memory each parameter consumes, which depends on the precision or quantization level used when loading the model. The most common types are:

- FP16 (16-bit floating point) requires 2 bytes per parameter, effectively balancing accuracy and memory usage. This is the default for many LLMs.

- FP32 (32-bit floating point) requires 4 bytes per parameter. Due to its high memory requirements, this option is typically reserved for training phases where maximal numerical precision is vital.

- Quantized models use reduced precision to significantly decrease memory requirements. They have become increasingly popular because they allow large models to run on consumer-grade hardware with limited VRAM. 8-bit quantization requires 1 byte per parameter, and 4-bit quantization requires 0.5 bytes per parameter.

Overhead factor - accounts for additional memory usage beyond storing the model weights. It includes:

- Activations: temporary data generated during inference or training

- Framework-dependent memory: extra memory used by frameworks like PyTorch or TensorFlow

- KV cache: attention keys and values stored for the context processed during text generation
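To get a feel for how the KV cache contributes to overhead, here is a minimal sketch of the commonly used size estimate: one key vector and one value vector per token, per layer, per key/value head. The function name and the model shape (32 layers, 32 KV heads, head dimension 128, 4,096 tokens of context) are hypothetical values chosen for illustration, not figures from any model discussed here.

```python
def kv_cache_bytes(num_layers, num_kv_heads, head_dim, seq_len,
                   batch_size=1, bytes_per_value=2):
    """Estimate KV cache size in bytes.

    The leading factor of 2 covers one key and one value vector
    per token, per layer, per KV head. bytes_per_value=2 assumes FP16.
    """
    return (2 * num_layers * num_kv_heads * head_dim
            * seq_len * batch_size * bytes_per_value)


# Hypothetical model shape, for illustration only.
size = kv_cache_bytes(num_layers=32, num_kv_heads=32, head_dim=128, seq_len=4096)
print(f"KV cache: {size / 1024**3:.2f} GiB")
```

Because the estimate grows linearly with both context length and batch size, long prompts or many concurrent requests can make the cache a large share of total VRAM.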

The overhead factor varies by model type and operation:

So, let's put this to the test and calculate the VRAM requirements for the models mentioned earlier:
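As a rough sketch, the formula can be turned into a few lines of Python. The overhead factor of 1.2 used here is an assumption picked for illustration only; the real value depends on your framework, context length, and batch size. The function and dictionary names are also hypothetical.

```python
# Bytes per parameter for the precision formats described above.
BYTES_PER_PARAM = {"fp32": 4.0, "fp16": 2.0, "int8": 1.0, "int4": 0.5}


def vram_required_gb(params_billions, precision, overhead_factor=1.2):
    """VRAM (GB) = parameters (billions) x bytes per parameter x overhead factor.

    overhead_factor=1.2 is an illustrative assumption, not a measured value.
    """
    return params_billions * BYTES_PER_PARAM[precision] * overhead_factor


# Parameter counts (in billions) as quoted earlier in this post.
MODELS = {
    "DeepSeek-R1": 671,
    "Llama 3.3": 70,
    "Stable Diffusion 3.5 Large": 8.1,
}

for name, params in MODELS.items():
    for precision in ("fp16", "int8", "int4"):
        gb = vram_required_gb(params, precision)
        print(f"{name:28s} {precision:5s} ~{gb:8.1f} GB")
```

Treat the results as ballpark figures for sizing hardware, not as guarantees that a model will fit.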

Transformers’ architecture and computing requirements

To understand why LLMs have such demanding memory requirements, it helps to look at their architecture.

Transformers are the foundation of most modern LLMs. They are designed to handle sequences in parallel and store large amounts of contextual information, which directly impacts how much VRAM is needed during inference.

Transformers are a type of neural network architecture that transforms or changes an input sequence into an output sequence. They do this by learning context and tracking relationships between sequence components.

For example, consider this input sequence: "What is the color of the sky?" The transformer model uses an internal mathematical representation that identifies the relevancy and relationship between the words color, sky, and blue. It uses that knowledge to generate the output: "The sky is blue."

Unlike traditional Recurrent Neural Networks (RNNs), which process sequences one token at a time, transformers analyze entire token sequences simultaneously through three components:

- Embedding layer - converts tokens into high-dimensional vectors that capture semantic relationships.

- Transformer blocks - (1) multi-head attention, which dynamically weights the relationships between tokens through QKV (Query, Key, and Value) matrices, and (2) position-wise feed-forward networks, MLPs (Multilayer Perceptrons) that refine each token's representation.

- Output head - converts the final embeddings into token probabilities via a linear projection and softmax.
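To make the attention step more concrete, here is a minimal pure-Python sketch of single-head scaled dot-product attention. It is a simplification under stated assumptions: there are no learned Q/K/V projection matrices, no masking, and no multiple heads, and the toy vectors are made up for illustration.

```python
import math


def softmax(xs):
    """Numerically stable softmax over a list of floats."""
    m = max(xs)
    exps = [math.exp(x - m) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]


def attention(queries, keys, values):
    """Single-head scaled dot-product attention: softmax(Q K^T / sqrt(d_k)) V."""
    d_k = len(keys[0])
    outputs, all_weights = [], []
    for q in queries:
        # Similarity of this query to every key, scaled by sqrt(d_k).
        scores = [sum(qi * ki for qi, ki in zip(q, k)) / math.sqrt(d_k)
                  for k in keys]
        weights = softmax(scores)
        # Output is the attention-weighted sum of the value vectors.
        out = [sum(w * v[j] for w, v in zip(weights, values))
               for j in range(len(values[0]))]
        outputs.append(out)
        all_weights.append(weights)
    return outputs, all_weights


# Three toy token vectors of dimension 2 (illustrative values only).
tokens = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
outputs, weights = attention(tokens, tokens, tokens)
for row in weights:
    print([round(w, 3) for w in row])
```

Every token attends to every other token, which is why the keys and values for the whole context need to stay in memory during generation.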

Advanced precision formats

New GPU architectures bring new precision formats. BF16 (Brain Floating Point) is leading the way in training and fine-tuning tasks. BF16 keeps the same 8-bit exponent as FP32, so it preserves FP32’s dynamic range and reduces the risk of underflow or overflow during training.

BF16 also offers simpler conversion. Unlike FP16, which typically requires loss scaling to keep small gradients from underflowing, BF16 shares FP32’s exponent layout, so converting between the two only changes the width of the mantissa. This simplifies the training pipeline and improves efficiency.

Recent GPUs like the NVIDIA A100 and H100 have tensor cores optimized for BF16 and LLM workloads. BF16 balances the memory efficiency of 16-bit formats with the numerical stability of 32-bit formats, making it a great fit for training LLMs.
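The range difference between BF16 and FP16 can be seen with a small sketch that uses only Python’s standard `struct` module. It emulates BF16 by keeping the top 16 bits of the FP32 bit pattern. Note the assumption: this truncates toward zero, whereas real hardware usually rounds to nearest, so the helper names and exact rounding here are illustrative rather than how any particular GPU behaves.

```python
import struct


def to_bf16(x):
    """Round-trip x through BF16 by truncating its FP32 bit pattern.

    BF16 is the top 16 bits of FP32: 1 sign bit, 8 exponent bits,
    7 mantissa bits. Truncation is a simplification of hardware rounding.
    """
    bits = struct.unpack("<I", struct.pack("<f", x))[0]
    return struct.unpack("<f", struct.pack("<I", (bits >> 16) << 16))[0]


def to_fp16(x):
    """Round-trip x through IEEE FP16; return None if x is out of range."""
    try:
        return struct.unpack("<e", struct.pack("<e", x))[0]
    except (OverflowError, struct.error):
        return None


for value in (1.0, 3.14159, 100000.0):
    print(f"{value:>10}: bf16={to_bf16(value)}, fp16={to_fp16(value)}")
```

A value such as 100000.0 is beyond FP16’s maximum of 65504, so it cannot be stored, while BF16 keeps it at reduced precision. That trade of mantissa bits for exponent bits is what makes BF16 more forgiving during training.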

Quantization for inference

Precision format determines how efficiently a model runs in memory-constrained environments.

For inference, quantization has emerged as a key technique for reducing VRAM usage without significantly compromising output quality. To elaborate, quantization is the process of mapping values from a large, effectively continuous range onto a much smaller set of discrete values.
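As a rough illustration of that mapping, here is a minimal sketch of symmetric "absmax" quantization to 8-bit integers in pure Python. This is one simple scheme among many; production libraries typically quantize per channel or per block and use more careful calibration, and the function names and sample weights below are hypothetical.

```python
def quantize_int8(weights):
    """Map floats to integers in [-127, 127] using a single scale factor."""
    max_abs = max(abs(w) for w in weights)
    # Fall back to a scale of 1.0 when all weights are zero.
    scale = max_abs / 127 if max_abs > 0 else 1.0
    quantized = [max(-127, min(127, round(w / scale))) for w in weights]
    return quantized, scale


def dequantize(quantized, scale):
    """Recover approximate float values from the integers."""
    return [q * scale for q in quantized]


# Made-up weights for illustration.
weights = [0.12, -0.5, 0.33, 0.9, -1.27]
q, scale = quantize_int8(weights)
restored = dequantize(q, scale)

print("quantized:", q)
print("max error:", max(abs(a - b) for a, b in zip(weights, restored)))
```

Each weight now needs 1 byte instead of 2 or 4, at the cost of a rounding error of at most half the scale factor per weight.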

INT8 and INT4 are the two most common quantization formats for inference, reducing memory usage and improving throughput. Here’s how they compare:

- INT8 requires 1 byte per parameter, reducing VRAM usage by 50% compared to FP16. It’s widely supported across GPUs and balances performance and accuracy well.

- INT4 requires only 0.5 bytes per parameter, cutting memory usage by 75% compared to FP16. It requires specialized optimization to maintain accuracy, and support is best on newer GPUs like NVIDIA’s A100, H100, and RTX 40 series, although older Turing architecture GPUs like the RTX 20 series and T4 can also run it.
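The percentages above follow directly from the bytes-per-parameter figures. Here is a short sketch that checks them for the weights of a 70-billion-parameter model such as Llama 3.3. It counts weights only and ignores overhead, and the helper names are hypothetical.

```python
FP16_BYTES = 2.0  # baseline: FP16 uses 2 bytes per parameter


def weight_memory_gb(params_billions, bytes_per_param):
    """Memory for the weights alone, in GB (no overhead included)."""
    return params_billions * bytes_per_param


def saving_vs_fp16(bytes_per_param):
    """Fraction of memory saved relative to FP16."""
    return 1 - bytes_per_param / FP16_BYTES


for name, bytes_per_param in (("FP16", 2.0), ("INT8", 1.0), ("INT4", 0.5)):
    gb = weight_memory_gb(70, bytes_per_param)
    saving = saving_vs_fp16(bytes_per_param)
    print(f"{name}: {gb:6.1f} GB of weights, {saving:.0%} saved vs FP16")
```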

In the table below, you can see some popular GPUs and their preferred precision formats.

Final thoughts

To maximize the value of your LLM configuration, you must balance model architecture, precision formats, and GPU capabilities.

The choice of precision format affects memory consumption and directly impacts the LLM setup's computational efficiency, energy consumption, and scalability.

Whether you're building chatbots, code generators, or other AI-driven applications, investing in the proper hardware and adopting efficient memory management strategies will ensure you stay ahead in this rapidly evolving field.
